AI chatbots could help patients discuss sensitive health conditions

- 21 December 2021

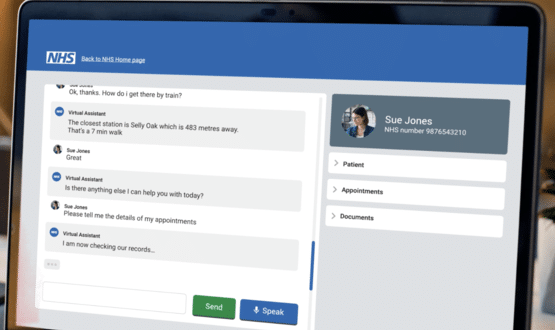

Research from the University of Westminster suggests AI chatbots could help people open up about sensitive health conditions that they might be reluctant to discuss with healthcare professionals.

Researchers from the university collaborated with a team from University College London to determine how the perceived stigma and severity of health conditions relate to the acceptability of health information from different sources.

The team found that for highly stigmatising health conditions, such as STIs, an artificial intelligence (AI) chatbot was favoured over a GP. For severe health conditions such as cancer, however, patients preferred to speak to a GP for health advice.

Overall, healthcare professionals were perceived to be the most desirable source of health information. However, the research highlighted that chatbots could encourage patients to speak up about conditions they don’t feel comfortable discussing with their GP.

The researchers noted that AI technology has its limitations in healthcare, particularly when input is required from patients, but suggested that further research could help establish a set of health topics best suited to chatbot-led interventions.

Dr Tom Nadarzynski, lead author of the study at the University of Westminster, said: “Many AI developers need to assess whether their AI-based healthcare tools, such as symptom checkers or risk calculators, are acceptable interventions.

“Our research finds that patients value the opinion of healthcare professionals, therefore implementation of AI in healthcare may not be suitable in all cases, especially for serious illnesses.”

The research paper, ‘Health chatbots acceptability moderated by perceived stigma and severity: a cross-sectional survey’, was published in the SAGE journal Digital Health earlier this month.

In October, the University of Westminster received a share of £1.4m in funding from the NHS AI Lab and the Health Foundation to explore how AI could address racial and ethnic health inequalities.